DRBD Primary/Primary using GFS

May 23, 2008

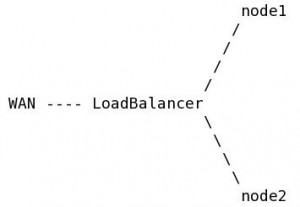

My goal in running DRBD as Primary/Primary with GFS is to load-balance an HTTP service. My servers are set up as follows:

I use the GFS partition as the document root for my web server (Apache).

A SAN would probably be a better choice for storage, but it is expensive. Other options are iSCSI or GNBD, but they need more servers, which also costs extra money 🙂

Maybe in the future I will move to SAN, iSCSI or GNBD, but for now DRBD and GFS on two nodes behind a load balancer work well and are fast enough.

For testing and for preparing this quick howto I used Xen to create two virtual machines, with CentOS 5 as the OS. The partition that I want to use for GFS is named xvdb1. Make sure your partition doesn't contain any data you want to keep (it will be destroyed).

To wipe the partition I ran this command on both nodes:

dd if=/dev/zero of=/dev/xvdb1

Change /dev/xvdb1 to your partition (again, make sure it doesn't contain any data you need).
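Because dd overwrites the device without asking, a small guard can help. The sketch below is an addition of mine, not part of the original procedure: a hypothetical helper, `is_mounted`, looks a device up in a mounts table (by default /proc/mounts) so the wipe can be skipped when the partition is still mounted. It only checks mounts; it will not notice a partition that is in use as swap or as an LVM physical volume.

```shell
#!/bin/sh
# Hypothetical helper (not from the original post): succeed if the given
# device appears as a mount source in a mounts table such as /proc/mounts.
is_mounted() {
    dev=$1
    table=${2:-/proc/mounts}
    # Field 1 of each line in /proc/mounts is the mounted device.
    awk -v d="$dev" '$1 == d { found = 1 } END { exit !found }' "$table"
}

# Usage sketch: only wipe when the partition is not mounted.
# if is_mounted /dev/xvdb1; then
#     echo "/dev/xvdb1 is mounted, refusing to wipe" >&2
# else
#     dd if=/dev/zero of=/dev/xvdb1
# fi
```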

The following commands have to be run on both nodes; for simplicity I show the output from one machine only.

* download DRBD on node1 & node2:

[root@node1 ~]# mkdir downloads

[root@node1 ~]# cd downloads/

[root@node1 downloads]# wget -c http://oss.linbit.com/drbd/8.2/drbd-8.2.5.tar.gz

* untar it:

[root@node1 downloads]# time tar -xzpf drbd-8.2.5.tar.gz -C /usr/src/

real 0m0.162s

user 0m0.016s

sys 0m0.028s

[root@node1 downloads]# ls /usr/src/

drbd-8.2.5 redhat

* before building DRBD:

before you start, make sure you have the following installed in your system:

– make, gcc, the glibc development libraries, and the flex scanner generator must be installed

– kernel-headers and kernel-devel:

[root@node1 downloads]# yum list kernel-*

Loading "installonlyn" plugin

Setting up repositories

Reading repository metadata in from local files

Installed Packages

kernel.i686 2.6.18-8.el5 installed

kernel-headers.i386 2.6.18-8.el5 installed

kernel-xen.i686 2.6.18-8.el5 installed

kernel-xen-devel.i686 2.6.18-8.el5 installed

Available Packages

kernel-PAE.i686 2.6.18-8.el5 local

kernel-PAE-devel.i686 2.6.18-8.el5 local

kernel-devel.i686 2.6.18-8.el5 local

kernel-doc.noarch 2.6.18-8.el5 local

Remember that I am using the Xen kernel, which is why kernel-xen-devel is installed rather than kernel-devel.
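The devel package has to match the kernel flavour you are actually running. As a rough sketch (the helper name `devel_pkg_for` is made up for illustration, and the mapping assumes CentOS 5 style release strings such as 2.6.18-8.el5xen), you can derive the package name from `uname -r`:

```shell
#!/bin/sh
# Hypothetical helper: map a kernel release string (as printed by `uname -r`)
# to the CentOS 5 package that provides the matching kernel build files.
devel_pkg_for() {
    case $1 in
        *xen) echo kernel-xen-devel ;;   # Xen kernel, e.g. 2.6.18-8.el5xen
        *PAE) echo kernel-PAE-devel ;;   # PAE kernel, e.g. 2.6.18-8.el5PAE
        *)    echo kernel-devel ;;       # plain kernel
    esac
}

# Print the package name for the running kernel.
devel_pkg_for "$(uname -r)"
```

The printed name can then be passed to yum, for example `yum install "$(devel_pkg_for "$(uname -r)")"`.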

* building DRBD:

– building DRBD kernel module:

[root@node1 downloads]# cd /usr/src/drbd-8.2.5/drbd

[root@node1 drbd]# make clean all

.

.

.

mv .drbd_kernelrelease.new .drbd_kernelrelease

Memorizing module configuration … done.

[root@node1 drbd]#

– checking the new kernel module:

[root@node1 drbd]# modinfo drbd.ko

filename: drbd.ko

alias: block-major-147-*

license: GPL

description: drbd – Distributed Replicated Block Device v8.2.5

author: Philipp Reisner <phil@linbit.com>, Lars Ellenberg <lars@linbit.com>

srcversion: E325FBFE020C804C4FABA31

depends:

vermagic: 2.6.18-8.el5xen SMP mod_unload 686 REGPARM 4KSTACKS gcc-4.1

parm: minor_count:Maximum number of drbd devices (1-255) (int)

parm: allow_oos:DONT USE! (bool)

parm: enable_faults:int

parm: fault_rate:int

parm: fault_count:int

parm: fault_devs:int

parm: trace_level:int

parm: trace_type:int

parm: trace_devs:int

parm: usermode_helper:string

[root@node1 drbd]#

– Building a DRBD RPM package

[root@node1 drbd]# cd /usr/src/drbd-8.2.5/

[root@node1 drbd-8.2.5]# make rpm

.

.

.

You have now:

-rw-r--r-- 1 root root 142722 May 23 11:45 dist/RPMS/i386/drbd-8.2.5-3.i386.rpm

-rw-r--r-- 1 root root 232238 May 23 11:45 dist/RPMS/i386/drbd-debuginfo-8.2.5-3.i386.rpm

-rw-r--r-- 1 root root 851602 May 23 11:45 dist/RPMS/i386/drbd-km-2.6.18_8.el5xen-8.2.5-3.i386.rpm

[root@node1 drbd-8.2.5]#

– installing DRBD:

[root@node1 drbd-8.2.5]# cd dist/RPMS/i386/

[root@node1 i386]# rpm -ihv drbd-8.2.5-3.i386.rpm drbd-km-2.6.18_8.el5xen-8.2.5-3.i386.rpm

Preparing… ########################################### [100%]

1:drbd ########################################### [ 50%]

2:drbd-km-2.6.18_8.el5xen########################################### [100%]

* Configuring DRBD:

– For lower-level storage I use a simple setup: both hosts have a free (currently unused) partition named /dev/xvdb1, and I use internal meta data.

– for /etc/drbd.conf i use this configuration:

resource r0 {

protocol C;

startup {

become-primary-on both;

}

net {

allow-two-primaries;

cram-hmac-alg "sha1";

shared-secret "123456";

after-sb-0pri discard-least-changes;

after-sb-1pri violently-as0p;

after-sb-2pri violently-as0p;

rr-conflict violently;

}

syncer {

rate 44M;

}

on node1.test.lab {

device /dev/drbd0;

disk /dev/xvdb1;

address 192.168.1.1:7789;

meta-disk internal;

}

on node2.test.lab {

device /dev/drbd0;

disk /dev/xvdb1;

address 192.168.1.2:7789;

meta-disk internal;

}

}

Note that the "become-primary-on both" startup option is needed in a Primary/Primary configuration.

* starting DRBD for the first time:

the following steps must be performed on the two nodes:

– Create device metadata

[root@node1 i386]# drbdadm create-md r0

v08 Magic number not found

v07 Magic number not found

v07 Magic number not found

v08 Magic number not found

Writing meta data…

initialising activity log

NOT initialized bitmap

New drbd meta data block sucessfully created.

--== Creating metadata ==--

As with nodes we count the total number of devices mirrored by DRBD at

at http://usage.drbd.org.

The counter works completely anonymous. A random number gets created for

this device, and that randomer number and the devices size will be sent.

http://usage.drbd.org/cgi-bin/insert_usage.pl?nu=18231616900827588600&ru=15113975333795790860&rs=2147483648

Enter 'no' to opt out, or just press [return] to continue:

success

– Attach. This step associates the DRBD resource with its backing device:

[root@node1 i386]# modprobe drbd

[root@node1 i386]# drbdadm attach r0

– verify running DRBD:

on node1:

[root@node1 i386]# cat /proc/drbd

version: 8.2.5 (api:88/proto:86-88)

GIT-hash: 9faf052fdae5ef0c61b4d03890e2d2eab550610c build by root@node1.test.lab, 2008-05-23 11:45:23

0: cs:StandAlone st:Secondary/Unknown ds:Inconsistent/Outdated r---

ns:0 nr:0 dw:0 dr:0 al:0 bm:0 lo:0 pe:0 ua:0 ap:0

resync: used:0/31 hits:0 misses:0 starving:0 dirty:0 changed:0

act_log: used:0/127 hits:0 misses:0 starving:0 dirty:0 changed:0

on node2:

[root@node2 i386]# cat /proc/drbd

version: 8.2.5 (api:88/proto:86-88)

GIT-hash: 9faf052fdae5ef0c61b4d03890e2d2eab550610c build by root@node2.test.lab, 2008-05-23 12:58:18

0: cs:StandAlone st:Secondary/Unknown ds:Inconsistent/Outdated r---

ns:0 nr:0 dw:0 dr:0 al:0 bm:0 lo:0 pe:0 ua:0 ap:0

resync: used:0/31 hits:0 misses:0 starving:0 dirty:0 changed:0

act_log: used:0/127 hits:0 misses:0 starving:0 dirty:0 changed:0

– Connect. This step connects the DRBD resource with its counterpart on the peer node:

[root@node1 i386]# drbdadm connect r0

[root@node1 i386]# cat /proc/drbd

version: 8.2.5 (api:88/proto:86-88)

GIT-hash: 9faf052fdae5ef0c61b4d03890e2d2eab550610c build by root@node1.test.lab, 2008-05-23 11:45:23

0: cs:WFConnection st:Secondary/Unknown ds:Inconsistent/Outdated C r---

ns:0 nr:0 dw:0 dr:0 al:0 bm:0 lo:0 pe:0 ua:0 ap:0

resync: used:0/31 hits:0 misses:0 starving:0 dirty:0 changed:0

act_log: used:0/127 hits:0 misses:0 starving:0 dirty:0 changed:0

– initial device synchronization for the first time:

The following step must be done on one node only. I used node1:

[root@node1 i386]# drbdadm -- --overwrite-data-of-peer primary r0

– verify:

[root@node1 i386]# cat /proc/drbd

version: 8.2.5 (api:88/proto:86-88)

GIT-hash: 9faf052fdae5ef0c61b4d03890e2d2eab550610c build by root@node1.test.lab, 2008-05-23 11:45:23

0: cs:SyncSource st:Primary/Secondary ds:UpToDate/Inconsistent C r---

ns:792 nr:0 dw:0 dr:792 al:0 bm:0 lo:0 pe:0 ua:0 ap:0

[>………………..] sync’ed: 0.2% (2096260/2097052)K

finish: 2:11:00 speed: 264 (264) K/sec

resync: used:0/31 hits:395 misses:1 starving:0 dirty:0 changed:1

act_log: used:0/127 hits:0 misses:0 starving:0 dirty:0 changed:0

[root@node2 i386]# cat /proc/drbd

version: 8.2.5 (api:88/proto:86-88)

GIT-hash: 9faf052fdae5ef0c61b4d03890e2d2eab550610c build by root@node2.test.lab, 2008-05-23 12:58:18

0: cs:SyncTarget st:Secondary/Primary ds:Inconsistent/UpToDate C r---

ns:0 nr:1896 dw:1896 dr:0 al:0 bm:0 lo:0 pe:0 ua:0 ap:0

[>………………..] sync’ed: 0.2% (2095156/2097052)K

finish: 2:02:12 speed: 268 (268) K/sec

resync: used:0/31 hits:947 misses:1 starving:0 dirty:0 changed:1

act_log: used:0/127 hits:0 misses:0 starving:0 dirty:0 changed:0

By now, our DRBD device is fully operational, even before the initial synchronization has completed. We can now continue to configure GFS...

– Configuring your nodes to support GFS

Before we can configure GFS, we need a little help from RHCS. The following packages need to be installed on both systems:

– "cman" (Red Hat Cluster Manager)

– "lvm2-cluster" (LVM with cluster support)

– "gfs-utils" or "gfs2-utils" (GFS1 or GFS2 utilities; at the time of writing I prefer GFS1)

– "kmod-gfs", or "kmod-gfs-xen" for Xen (GFS kernel module)

* we must enable and start the following system services on both nodes:

– cman : it will run ccsd, fenced, dlm and openais.

– clvmd.

– gfs.

starting cman:

Before we can start cman, we have to configure /etc/cluster/cluster.conf. I use the following configuration:

<?xml version="1.0"?>
<cluster name="my-cluster" config_version="1">
  <cman two_node="1" expected_votes="1">
  </cman>
  <clusternodes>
    <clusternode name="node1.test.lab" votes="1" nodeid="1">
      <fence>
        <method name="single">
          <device name="human" ipaddr="192.168.1.1"/>
        </method>
      </fence>
    </clusternode>
    <clusternode name="node2.test.lab" votes="1" nodeid="2">
      <fence>
        <method name="single">
          <device name="human" ipaddr="192.168.1.2"/>
        </method>
      </fence>
    </clusternode>
  </clusternodes>
  <fence_devices>
    <fence_device name="human" agent="fence_manual"/>
  </fence_devices>
</cluster>

After editing /etc/cluster/cluster.conf we have to start cman on both nodes at the same time:

on the node1:

[root@node1 i386]# /etc/init.d/cman start

Starting cluster:

Loading modules… done

Mounting configfs… done

Starting ccsd… done

Starting cman… done

Starting daemons… done

Starting fencing… done

[ OK ]

on the node2:

[root@node2 i386]# /etc/init.d/cman start

Starting cluster:

Loading modules… done

Mounting configfs… done

Starting ccsd… done

Starting cman… done

Starting daemons… done

Starting fencing… done

[ OK ]

check nodes:

[root@node1 i386]# cman_tool nodes

Node Sts Inc Joined Name

1 M 4 2008-05-23 14:33:25 node1.test.lab

2 M 316 2008-05-23 14:41:34 node2.test.lab

In the 'Sts' column, 'M' means the node is a cluster member and everything is fine; 'X' means the node is not a member, so something has gone wrong.
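If you want to script this check, something like the following could work. It is only a sketch: the function name `all_members` is made up, and it assumes the output layout shown above (one header line, then one line per node with the status in the second column).

```shell
#!/bin/sh
# Hypothetical helper: read `cman_tool nodes` output on stdin and fail
# if any node's Sts column is something other than "M".
all_members() {
    awk 'NR > 1 && NF >= 2 && $2 != "M" {
             bad = 1
             print "not a member: " $NF   # last field is the node name
         }
         END { exit bad + 0 }'
}

# Usage sketch:
# cman_tool nodes | all_members && echo "cluster membership OK"
```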

– starting CLVMD:

First we need to change the locking type in /etc/lvm/lvm.conf to 3 on both nodes:

vi /etc/lvm/lvm.conf

change locking_type = 1 to locking_type = 3

We also need to change the filter option so that vgscan doesn't see a duplicate PV (the duplicate appears because xvdb1 is the backing device for drbd0, so the same PV is visible through both devices). I changed the filter like this:

#filter = [ "a/.*/" ]

filter = [ "a|xvda.*|", "a|drbd.*|", "r|xvdb.*|" ]

In my filter, "a|xvda.*|" means accept all xvda partitions, "a|drbd.*|" means accept all drbd devices, and "r|xvdb.*|" means reject (ignore) all xvdb partitions (one of them is our partition, xvdb1).

Save and exit.
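To see how such a filter is evaluated, here is a simplified model in shell. It is not LVM code, just a sketch of the rule that patterns are tried in order and the first one that matches a device path decides; a path that matches nothing is accepted. The function name `lvm_filter` is made up, and the sketch assumes every pattern uses "|" as its delimiter and ignores the fact that a real device can have several path names.

```shell
#!/bin/sh
# Simplified model of an LVM filter: walk the patterns in order and let the
# first regex that matches the device path decide (a = accept, r = reject).
# A device path that matches no pattern is accepted.
lvm_filter() {
    dev=$1; shift
    for pat in "$@"; do
        action=${pat%%"|"*}             # leading "a" or "r"
        re=${pat#*"|"}; re=${re%"|"}    # regex between the delimiters
        if printf '%s\n' "$dev" | grep -Eq -- "$re"; then
            if [ "$action" = a ]; then echo accept; else echo reject; fi
            return 0
        fi
    done
    echo accept
}

# Try the filter from this post against three example devices.
for d in /dev/xvda2 /dev/drbd0 /dev/xvdb1; do
    printf '%s: %s\n' "$d" "$(lvm_filter "$d" 'a|xvda.*|' 'a|drbd.*|' 'r|xvdb.*|')"
done
```

With the filter above, /dev/xvda2 and /dev/drbd0 come out as accepted and /dev/xvdb1 as rejected, which is what keeps vgscan from seeing the PV twice.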

The first thing to do is run vgscan, so that LVM reads the new configuration:

[root@node1 i386]# vgscan

Reading all physical volumes. This may take a while...

Found volume group "VolGroup00" using metadata type lvm2

– the following commands must be run on one node only, I used node1 –

Now create our PV:

[root@node1 i386]# pvcreate /dev/drbd0

Physical volume "/dev/drbd0" successfully created

Create our volume group:

[root@node1 i386]# vgcreate my-vol /dev/drbd0

Volume group "my-vol" successfully created

[root@node1 i386]# vgdisplay

--- Volume group ---

VG Name my-vol

System ID

Format lvm2

Metadata Areas 1

Metadata Sequence No 1

VG Access read/write

VG Status resizable

Clustered yes

Open LV 0

Max PV 0

Cur PV 1

Act PV 1

VG Size 2.00 GB

PE Size 4.00 MB

Total PE 511

Alloc PE / Size 0 / 0

Free PE / Size 511 / 2.00 GB

VG UUID UaUK5v-P3aX-nmCn-Oj3F-XQox-AgxB-UsM0xS

Did you notice "Clustered yes"?

Create our LV:

[root@node1 i386]# lvcreate -L1.9G --name my-lv my-vol

Rounding up size to full physical extent 1.90 GB

Error locking on node node2.test.lab: device-mapper: reload ioctl failed: Invalid argument

Failed to activate new LV.

creating the GFS:

[root@node1 i386]# gfs_mkfs -p lock_dlm -t my-cluster:www -j 2 /dev/my-vol/my-lv

This will destroy any data on /dev/my-vol/my-lv.

Are you sure you want to proceed? [y/n] y

Device: /dev/my-vol/my-lv

Blocksize: 4096

Filesystem Size: 433092

Journals: 2

Resource Groups: 8

Locking Protocol: lock_dlm

Lock Table: my-cluster:www

Syncing…

All Done
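The value given to -t has the form ClusterName:FSName, and the cluster part has to match the name attribute of the cluster element in /etc/cluster/cluster.conf, otherwise the mount will be refused. A small sketch for checking this before running gfs_mkfs (the helper names `cluster_name` and `lock_table_matches` are made up, and the sed expression assumes plain double quotes and the opening cluster tag on a single line):

```shell
#!/bin/sh
# Hypothetical helper: print the name="..." attribute of the <cluster> element.
# Assumes plain double quotes and the <cluster ...> tag on a single line.
cluster_name() {
    sed -n 's/.*<cluster [^>]*name="\([^"]*\)".*/\1/p' "$1" | head -n 1
}

# Succeed if the cluster part of a "cluster:fsname" lock table
# matches the cluster name found in the given cluster.conf.
lock_table_matches() {
    [ "${1%%:*}" = "$(cluster_name "$2")" ]
}

# Usage sketch:
# lock_table_matches my-cluster:www /etc/cluster/cluster.conf \
#     || echo "lock table does not match cluster name" >&2
```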

start gfs service:

[root@node1 i386]# /etc/init.d/gfs start

mount it on the first node:

[root@node1 i386]# mount -t gfs /dev/my-vol/my-lv /www

[root@node1 i386]# df -h

Filesystem Size Used Avail Use% Mounted on

/dev/mapper/VolGroup00-LogVol00

9.1G 3.4G 5.3G 40% /

/dev/xvda1 99M 17M 78M 18% /boot

tmpfs 129M 0 129M 0% /dev/shm

/dev/my-vol/my-lv 1.7G 20K 1.7G 1% /www

[root@node1 i386]# ls -lth /www/

total 0

To mount it on the second node:

First you have to wait until the initial device synchronization finishes. To check:

[root@node2 i386]# cat /proc/drbd

version: 8.2.5 (api:88/proto:86-88)

GIT-hash: 9faf052fdae5ef0c61b4d03890e2d2eab550610c build by root@node2, 2008-05-23 12:58:18

0: cs:SyncTarget st:Secondary/Primary ds:Inconsistent/UpToDate C r---

ns:0 nr:1970404 dw:1970404 dr:0 al:0 bm:119 lo:0 pe:0 ua:0 ap:0

[=================>..] sync’ed: 93.4% (143276/2097052)K

finish: 0:08:57 speed: 252 (232) K/sec

resync: used:0/31 hits:976756 misses:120 starving:0 dirty:0 changed:120

act_log: used:0/127 hits:0 misses:0 starving:0 dirty:0 changed:0
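Rather than re-running cat by hand, the wait can be scripted. This is a minimal sketch under the assumption that there is a single DRBD resource and that /proc/drbd has the layout shown above; the function name `drbd_in_sync` is made up for illustration.

```shell
#!/bin/sh
# Hypothetical helper: succeed once the status text (default /proc/drbd)
# shows a connected resource with up-to-date data on both sides.
# With several resources it succeeds as soon as any one of them matches.
drbd_in_sync() {
    grep -q 'cs:Connected .*ds:UpToDate/UpToDate' "${1:-/proc/drbd}"
}

# Usage sketch: poll every 30 seconds, then promote this node.
# until drbd_in_sync; do sleep 30; done
# drbdadm primary r0
```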

After it finishes, we need to promote node2 to primary before we can mount the filesystem:

[root@node2 i386]# drbdadm primary r0

[root@node2 i386]# cat /proc/drbd

version: 8.2.5 (api:88/proto:86-88)

GIT-hash: 9faf052fdae5ef0c61b4d03890e2d2eab550610c build by root@node2, 2008-05-23 12:58:18

0: cs:Connected st:Primary/Primary ds:UpToDate/UpToDate C r---

ns:0 nr:2113680 dw:2113680 dr:0 al:0 bm:128 lo:0 pe:0 ua:0 ap:0

resync: used:0/31 hits:1048386 misses:128 starving:0 dirty:0 changed:128

act_log: used:0/127 hits:0 misses:0 starving:0 dirty:0 changed:0

Notice "st:Primary/Primary": that is what we want! 🙂

now to check the volume group:

[root@node2 ~]# vgscan

Reading all physical volumes. This may take a while…

Found volume group "VolGroup00" using metadata type lvm2

Found volume group "my-vol" using metadata type lvm2

mount it!

[root@node2 i386]# /etc/init.d/gfs start

[root@node2 i386]# mkdir /www

[root@node2 i386]# mount -t gfs /dev/my-vol/my-lv /www

/sbin/mount.gfs: can't open /dev/my-vol/my-lv: No such file or directory

Oops. Do you remember the error "Error locking on node node2.test.lab: device-mapper: reload ioctl failed: Invalid argument" from when we created our LV on the first node? The fix is easy: restart clvmd on node2 and try mounting again:

[root@node2 i386]# /etc/init.d/clvmd restart

Deactivating VG my-vol: 0 logical volume(s) in volume group "my-vol" now active

[ OK ]

Stopping clvm: [ OK ]

Starting clvmd: [ OK ]

Activating VGs 2 logical volume(s) in volume group "VolGroup00" now active

1 logical volume(s) in volume group "my-vol" now active

[ OK ]

[root@node2 i386]# mount -t gfs /dev/my-vol/my-lv /www

Aha, let's create a file:

[root@node2 i386]# touch /www/hi

[root@node2 i386]# ls -lth /www/

total 8.0K

-rw-r--r-- 1 root root 0 May 23 16:35 hi

and from node1:

[root@node1 i386]# ls -lth /www/

total 8.0K

-rw-r--r-- 1 root root 0 May 23 16:35 hi

Cool, right? Try it yourself...

October 3rd, 2008 at 11:32 pm

works great, thx a lot!

October 30th, 2008 at 10:50 pm

You are the CMAN!

Way to go dude 😉

October 31st, 2008 at 2:19 am

😀

December 8th, 2008 at 1:52 am

What happens when a node crashes… does the other one still work?

What happens when the crashed node goes up?

December 8th, 2008 at 12:25 pm

Yes, the other node will keep working without any problem, but when the crashed node comes back online you must do a manual recovery.

If a split brain happened, you need to repair it manually. Please see this link:

http://www.drbd.org/users-guide/s-manual-split-brain-recovery.html

December 12th, 2008 at 4:49 pm

i am asking you this because i did same thing last year and the problem for me was that after one of the nodes crashed, the gfs access on the other node also crashed and in the log cman was complaining that he cannot fence the specific node

I’ll try to do the same thing tonight to see what happens.

December 12th, 2008 at 5:52 pm

the only thing that i didn’t use was xen

January 5th, 2009 at 8:16 pm

The best thing, the way I see it, is to close the laptop and spare yourself this headache, no Linux, no whatever it's called, and come breathe some clean air in China for a week. As for the WAN network, if it is bothering you that much, I will set it up for you as soon as I get back to Jordan 😛. Greetings, and so on.

February 6th, 2009 at 3:08 pm

Fantastic post!!! Cheers!

February 13th, 2009 at 4:11 am

Now that you have almost a year of using this setup – how stable is it for you?

Did you have cases of one of the nodes crashing (for any reason) and recovering? What did you have to do to get it back?

Thanks.

February 13th, 2009 at 5:29 pm

it was working well for about 6 months, i remember one or two crashes happened, we recovered them just as pointed in drbd.org

for now we expanded the number of nodes, now we use 4 nodes, so drbd didn’t help us in this setup, i used GFS and GNBD from redhat to setup this, 1 GNBD server as storage, and 3 Nodes running Apache.

April 29th, 2009 at 1:40 am

hi-

ive used this setup and doing some tests with drbd. ive noticed that when i write a large file (>500M or so) to /mnt on node1, for example, i/o to the filesystem is stalled on node2 until drbd has completed replication of the file. for instance, if i cp a file to /mnt on node1 it will write at full local I/O speed and complete the write.. however, node1’s I/o is hangng until drbd catches up with the filesystem.. is this normal? is there a way to tweak this? changing drbd protocol perhaps? this is my first setup and have only spend an hour or so testing it. curious..

July 30th, 2009 at 11:45 am

Adam, sorry i didn’t face this thing before, files i had in the system was between 1-10MB;

October 2nd, 2009 at 3:41 am

Hi Shaker, how about heartbeat..? i try to combine it with drbd using ip alias. but i confuse to make them primary-primay node and build a load balancer to distribute the load.. any tutorials may be..? thanks a lot..!

October 4th, 2009 at 3:43 pm

Hello ilyas,

what you are confused of? i think if you don’t really need them load balanced (your application can run in one server) then don’t make them primary and stay with Active/Passive solution.

you need a tut. about what exactly?

October 6th, 2009 at 6:07 am

i’ve succeed make it in primary/secondary mode. but, i want all loads from the clients automatically balanced to both nodes and when one of nodes fails, the active node automatically becomes a primary one..

now i’m building a clustered server for my web and database server. it needs a load balancer to handle the loads from clients and synchronizing data on both nodes. i use heartbeat and drbd.. am i need a GFS service to solve my problems..?

thanks for the solutions..!

October 6th, 2009 at 9:24 am

Cong. on building your Primary/Secondary arch.

>> “but, i want all loads from the clients automatically balanced to both nodes and when one of nodes fails, the active node automatically becomes a primary one..”

what are you asking for is what this document explains, there is no Secondary at all in this setup, two nodes are Primary, but i think you have to consider many problems may appear from this setup, specially when one of the nodes became down, please read more about it.

a Clustered setup means that you need a file system that support clustering, and this why GFS is needed.

I suggest you to stay with Primary/Secondary unless you really needs Primary/Primary setup.

October 7th, 2009 at 1:19 am

ok, i become clear.. thanks for the suggestions.. Are u a network administrator..? Have a nice job Shaik!! and share many nice documents more.. 🙂

thanks for all!

October 7th, 2009 at 8:29 am

Great, you are welcome ,,

No, i am a sysadmin not network guy 😉

September 17th, 2010 at 10:39 am

HI,

Really superb and very usefull for every learner. Thanks a lot. Could you please post the redhar cluster configuration also if you have done.

April 3rd, 2011 at 6:44 am

Hi,

I don’t understand how does load balance work. Who does load balance? .. GFS?

April 5th, 2011 at 2:54 pm

Hi Hamilton, actually I’m not understanding your question, but GFS have nothing with balancing, In this post, the load balancer is hardware load balancer, doing balancing for HTTP between two Apache Nodes.

July 17th, 2014 at 8:19 pm

Hi,

Such a great article you did here, the configuration of drbd is more clear to me now.

I have just some questions because I still have some little points I don’t understand :

– How DRBD is doing the load balancing ? I mean, when a http request is send, how is it allocate to one server or the other ? (To me, it is a server on the front which is doing the load balancing)

– I don’t really understand the utility of the GFS system on a partition. Is it a lock manager ?

I’m sorry if I’m not perfectly clear, I’m trying to translate my thoughts as much as I can. 🙂